AI Law & Innovation Institute

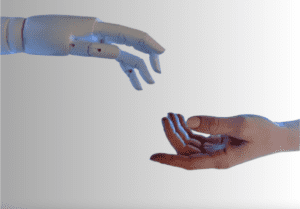

Law moves at human speed. AI moves at quantum speed. Our mission is to help courts, lawmakers, and administrative agencies adapt.

New technologies are shaping the future. Government and legal institutions must evolve or be left behind. We bring together scholars, policymakers, and industry leaders to propose frameworks for responsibly encouraging and managing the effects of innovation.

Working together, we can forge the best path forward.

The explosion of AI onto the scene presents exciting, high-stakes new challenges. Attention has focused on the disruptive potential of AI, but there is a gap between theoretical explorations and legal realities. UC Law is uniquely situated to bridge that gap, helping legal regimes facilitate innovation while circumscribing disruption.

The Institute focuses on the structures—the DNA—of legal processes. How can core legal systems—such as expert agencies and litigation—be enhanced? Where do we need additional structures or expertise?

Beyond AI, the next decade will bring challenges from robotics, synthetic biology, genetic engineering, and more. The Institute (fondly known as “Allie”) will help courts and agencies adapt to all these fast-paced technologies.

Robin’s Rules of Order for AI

A series of reflections by Professor Feldman providing principles for how to think about developments in AI.

Government Activities Related to AI

For almost a decade, Professor Feldman and C4i have provided guidance on AI to government institutions, including:

- Technical advice to congressional committees and state officials on regulation of AI

- The Army Cyber Institute’s threat casting exercise on Weaponization of Data

- The GAO’s Artificial Intelligence Roundtable report to Congress on the future of AI

- The United Nations (address on the impact of AI to the 2023 General Assembly Science Summit, delivered by Chancellor David Faigman)

- The Federal Trade Commission’s hearing on Emerging Competition, Innovation, and Market Structure Questions Around Algorithms, AI, and Predictive Analytics

- The US Patent & Trademark Office’s Listening Session on Patents and AI Inventorship

- The National Academies’ Workshop on AI and Machine Learning to Accelerate Translational Research, for the Government-University-Industry Research Roundtable

- The National Academies’ Workshop on Robotics and AI

Selected Publications by Professor Robin Feldman

Competition at the Dawn of AI (2019)

Proposes that, for AI-generated works, companies should receive a shorter period of protection, enforced through the regulatory approval process, in exchange for openness with the regulatory agency. The proposal is modeled partly on FDA data rights for pharmaceuticals.

Highlights three potential issues with patenting AI-generated inventions:

- Timeline: A 20-year patent is an eternity for AI. When it comes to the speed of change, AI travels in an entirely different dimension.

- Transparency: Where the invention calls for a method patent (for example, a method of using an AI to determine when a car hits the brakes, or whether an applicant will receive a loan), the limited disclosure norms of software patent law may not be enough. To gain societal acceptance of AI, policymakers and the public will want someone to look under the hood.

- Collective contribution to creativity: To the extent that AI systems derive their creative results partly from the collective decisions of numerous people, can the AI’s creativity be attributed solely to the program, its operator, or its owner?

Argues further that it is neither socially desirable nor entirely coherent to list AI as an inventor on patents, both in terms of the incentives that inventorship gives to people and in terms of people’s susceptibility to deterrence by rights controlled by others.

AI Governance in the Financial Industry; 27 Stanford J.L. Bus. & Fin. (2022)

with former SEC Commissioner Kara Stein

Recommends a framework for regulating AI built on three pieces of structural scaffolding:

- Touchpoints: where AI most tangibly interacts with the broader financial system.

- Types of evil: dividing harms inflicted by AI into the categories of the evil you planned, the evil you could have predicted, and unpredictable harms.

- Types of players: identifying actors as users, intermediaries, or creators of AI, and acknowledging that the harms reasonably predictable to an AI creator, for example, may differ from those predictable to a user or to actors in other fields.

Artificial Intelligence: The Importance of Trust and Distrust; 21 Green Bag 2d 201 (2018)

Arising from an Army Cyber Institute threat casting exercise and published 18 months before COVID-19 emerged in China, the article:

- Hypothesizes a public health emergency arising out of Asia that disrupts the U.S. health care system and creates distrust of government information. (The hypothesized scenario originates from data corruption, not from a biological virus.)

- Predicts that some US sub-populations will look for other sources of information that are not uniformly reliable or of the best intentions.

- Proposes that “AI systems should be subject to review entirely outside the system itself – either industry bodies or public bodies. As an average citizen, I may never understand how a biologic interchangeable is being produced, at least not enough to trust that the drug is safe. Nevertheless, I might trust the FDA. This form of institutionalized outside review, whether by private or public entities, will be essential for adequate trust and distrust.”

Artificial Intelligence in the Health Care Space: How We Can Trust What We Cannot Know; 30 Stanford L. & Pol’y Rev. 399

Suggests that “the pathways we use to place our trust in medicine provide useful models for learning to trust AI. As we stand on the brink of the AI revolution, our challenge is to create the structures and expertise that give all of society confidence in decision-making and information integrity.”

Proposes that a government body could create and regulate standards to:

- Document a dataset’s purpose, intended use, potential misuse, and areas of ethical and legal concern.

- Provide information integrity requirements for accuracy, completeness, and archival purposes.

- Address when conflicts of interest arise between cost savings from deploying AI and quality of patient/consumer care.

Forthcoming Works:

- Artificial Intelligence and Cracks in the Foundation of IP (forthcoming in UC Law Review)

- AI and Antitrust: The Algorithm Made Me Do It (forthcoming in Competition J. Antitrust and Unfair Competition Law Section)

- Harmonizing AI and IP (forthcoming book)